Within the range of what is realistically possible, technology can be made to do whatever those who own it want it to do. For instance, one can write a Python program that says, “Live and let live.” One can also write a Python program that says, “Feminists are bad.” Is it the program itself, or those who wrote it, that should be blamed for spreading hate?

While these are simple examples of the use and misuse of technology, advanced technology and artificial intelligence have capabilities that surprise even experts in the field, which means that their potential for misuse is equally frightening.

Many who believe that artificial intelligence, technology and science do not discriminate against marginalised people may not understand these fields well enough. This raises a question: why do scientists and technology enthusiasts work for capitalists, thereby contributing to capitalism? Research in any area needs funding; how else could one get all the resources? And who can fund massive research, buy prototypes and algorithms that can change the world, and gain legal ownership of new cures for pandemics? Billionaire businessmen, of course, for whom everything, including pandemics and wars, is a business opportunity.

Social media doesn’t restrict misogyny and queerphobia

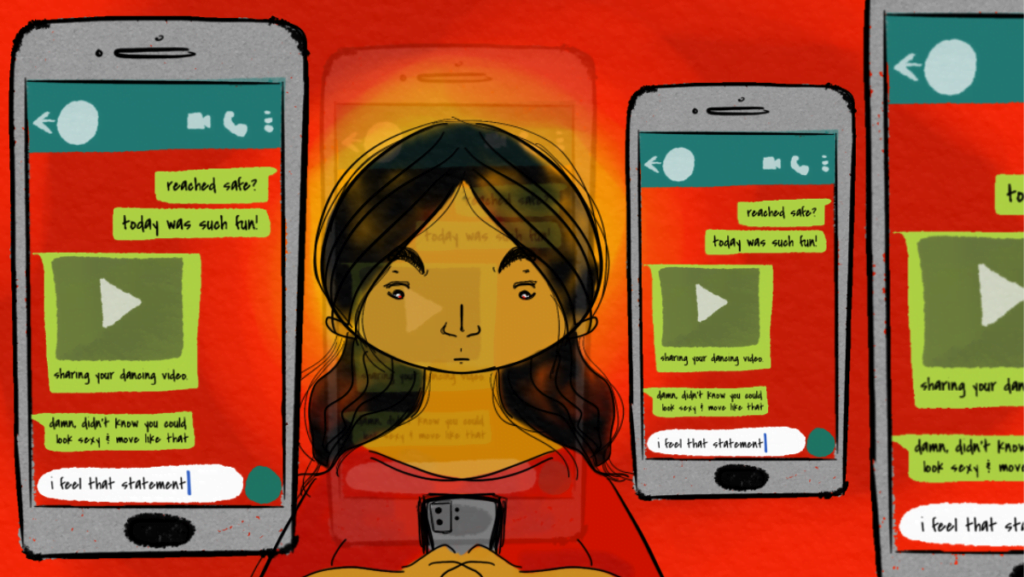

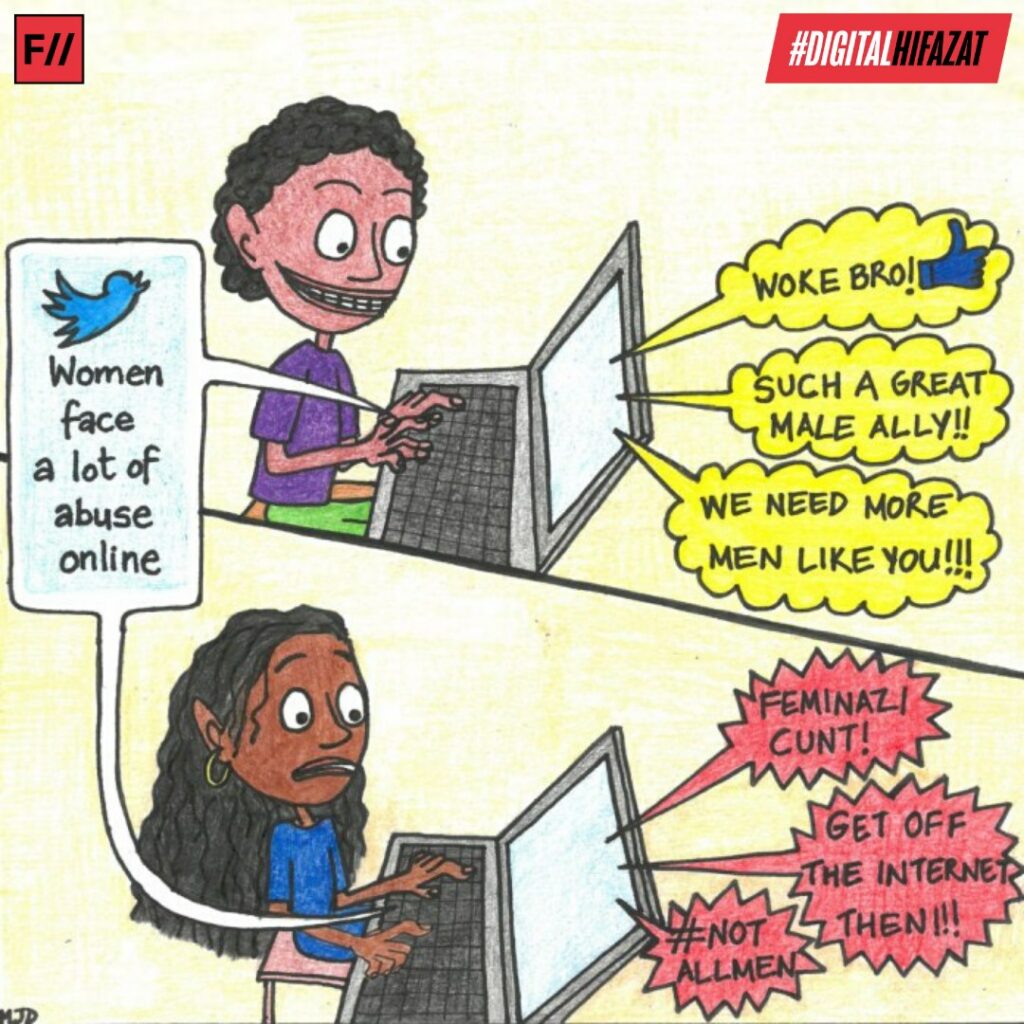

Social media is exciting for millions of users for several reasons. These apps keep us entertained with a constantly updating feed of short tweets, quotes, videos, reels, memes, images and attractive selfies, and users have the freedom to say almost anything they want. That is not always a good thing, because harassment and hate speech have become rampant.

Often, these haters are patriarchal men and boys: a bored online troll with no purpose in life, someone who has something to gain by being blatantly misogynistic, like Andrew Tate, or those whose hatred for women and queer folks flows freely because they are confident that strong political power or a cult is backing them, so nobody can hold them accountable. The victims in such cases are often women and queer folks.

Trolls and bored people who love to hate feminism and queer folks use every opportunity they get to engage in cyberbullying and slut-shaming. Take the case of Praveen Nath, Kerala’s first trans bodybuilder, whose suicide was caused by cyberbullying, or the more recent case of Deepika Padukone being openly harassed for speaking about her past, something that even her partner did not mind.

All these apps are powered by advanced data analysis and artificial intelligence tools, which is how they suggest connections between people with similar interests and values in a given country or location. This can be very helpful for queer folks and marginalised communities, because finding others like them is often a real relief, and it becomes easier to connect with queer support groups and activists, something many of them will need at some point in their lives.
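As a rough illustration of how such suggestions might work, the sketch below ranks users by how many interests they share, using Jaccard similarity (shared interests divided by all interests combined). This is an assumption for illustration only: real platforms use far more complex, proprietary systems, and the function names, user names and interests here are hypothetical.

```python
# A minimal, hypothetical sketch of interest-based connection suggestions.
# Real platforms use far more complex (and proprietary) systems; this only
# shows the basic idea of matching people by overlapping interests.

def jaccard(a: set[str], b: set[str]) -> float:
    """Share of interests two users have in common (0.0 to 1.0)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def suggest(user: str, profiles: dict[str, set[str]], top_n: int = 2) -> list[str]:
    """Return up to top_n other users with at least one shared interest, most similar first."""
    mine = profiles[user]
    scored = [
        (jaccard(mine, theirs), other)
        for other, theirs in profiles.items()
        if other != user
    ]
    # Sort by similarity (highest first), breaking ties alphabetically.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [other for score, other in scored[:top_n] if score > 0]


# Hypothetical users and interests, invented for this example.
profiles = {
    "asha": {"queer support", "poetry", "kochi"},
    "ravi": {"queer support", "kochi", "cycling"},
    "meera": {"poetry", "cooking"},
    "troll_1": {"anti-feminism", "memes"},
    "troll_2": {"anti-feminism", "memes", "cricket"},
}

print(suggest("asha", profiles))     # ['ravi', 'meera']
print(suggest("troll_1", profiles))  # ['troll_2']
```

Nothing in the scoring knows or cares what the interests actually are; the same arithmetic that links someone to a queer support group will just as readily link one troll to another.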

But the same advanced technology that helps the marginalised find comfort and shelter also helps trolls, haters and misogynists come together. Given their social privilege, and with no laws or conditions on these apps to control misogyny, vile comments and abuse are quite common.

How does AI work?

Artificial intelligence is nothing without data and brilliant algorithms. Take ChatGPT, for example: it is as powerful as it is because it is built on huge volumes of data on almost any topic you can think of, combined with powerful machine learning models such as GPT-4. Deep learning models are built from large amounts of data, whether text, images or other types, and these models can easily, and often free of cost, do things that would have been almost impossible for ordinary people to do.

Deepfake videos are typically made using autoencoders and generative adversarial networks (GANs). For this to work well, the technology needs a lot of data, such as photographs of the person being faked, and because many photographs of celebrities are freely available online, celebrities become easy targets.

How can advanced technology impact women and gender minorities?

AI is not always a dangerous, scary power. Some robots dance to entertain people, and they do nothing more than that. Some robots can mimic human behaviour, too. Some apps are built to help women and queer people, such as safety apps that connect users with their most-trusted friends, or queer dating apps.

If the question is whether this splendid technology can harm women and people of gender minorities, then yes, it certainly can. In one case, a young Indian man named Neeraj Bishnoi developed an app that put Muslim women “on sale” in a fake auction.

In Saudi Arabia, an app called “Absher” lets men who are the “guardians” of women and girls track their movements and activities using their national IDs and/or passports. Because the app gives men sole control over whether to approve their female family members’ travel, these “guardians” can prevent women trying to escape abuse from seeking asylum.

What is even more shocking is that these apps, built to control or shame women, were available on platforms like Google Play and Apple’s App Store; these big companies carelessly approved them!

How can AI impact women and other gender minorities?

According to Adam Zewe, generative AI can inherit and proliferate biases that exist in training data, or amplify hate speech and false statements. The models can plagiarise and generate content that looks like it was produced by a specific human creator, raising potential copyright issues.

There are two major ways in which such advanced technology and AI can harm the persecuted. First, if the data used to build a deep-learning model is heavily biased or skewed, whatever the generative AI produces will in turn be biased. Second, there is consent: was the data obtained with consent, and are the people represented in the AI’s outputs comfortable with those outputs before they are circulated, or even created? For someone who intends to misuse AI to harass, consent is rarely a concern.
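The first concern, biased data producing biased output, shows up even in a toy model. The sketch below is hypothetical and far simpler than a deep-learning model: it counts which pronoun follows an occupation word in some invented, deliberately skewed examples, then “generates” by always picking the most frequent pronoun. The training data and the `train`/`generate` names are assumptions made for illustration.

```python
from collections import Counter, defaultdict

# Hypothetical, deliberately skewed training data: (occupation, pronoun) pairs.
# 8 of 10 engineer examples use "he"; 8 of 10 nurse examples use "she".
training_data = (
    [("engineer", "he")] * 8 + [("engineer", "she")] * 2
    + [("nurse", "she")] * 8 + [("nurse", "he")] * 2
)


def train(pairs):
    """Count how often each pronoun follows each occupation."""
    counts = defaultdict(Counter)
    for occupation, pronoun in pairs:
        counts[occupation][pronoun] += 1
    return counts


def generate(model, occupation):
    """Greedy 'generation': always pick the most frequent pronoun."""
    return model[occupation].most_common(1)[0][0]


model = train(training_data)

# Generate 100 outputs about engineers and compare the skew with the data.
outputs = [generate(model, "engineer") for _ in range(100)]
share_in_data = model["engineer"]["he"] / sum(model["engineer"].values())
share_in_output = outputs.count("he") / len(outputs)
print(share_in_data, share_in_output)  # 0.8 1.0
```

In this toy data, 80% of the engineer examples use “he”, yet the greedy model produces “he” every single time: it does not merely inherit the skew, it amplifies it. Real generative models are far more sophisticated, but they too learn their patterns from whatever data they are given.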

In the wrong hands, the right technology, or enough money to pay for it, lets an abuser use AI to generate almost anything they want. One could easily create a fake video of the Pope saying that Jesus was originally born in New York.

In the case of women and gender minorities, with sexual abuse and hate being common, anyone can generate videos or images intended to sexualise or body-shame them, and such AI outputs can easily go viral within a few hours.

One recent example is the AI-generated deepfake video made to resemble the actor Rashmika Mandanna. If such acts go unpunished or are excused, that will only encourage more abusers to create content they think will excite other perverted and abusive users on social media.

Owners of social media apps like Twitter, Instagram, TikTok, Facebook, and YouTube just don’t care about harassment and abuse. As long as millions of users are actively glued to their apps, they can continue to make money, and that is all they care about. Rarely are misogyny and online sexual harassment punished. By not punishing abusers and people who misbehave, these apps nurture patriarchy and queerphobia even more.

AI is growing at a frightening pace, so much so that even popular technology figures like Elon Musk joined a call to pause the development of the most powerful AI systems, warning that they could pose a threat to humanity. Well, what about the online harassment and abuse of technology that happens every day on the apps they own and use? Entrepreneurs like Musk and Zuckerberg don’t seem to have the slightest interest or concern, and neither do most politicians, even though they claim to care about humanity.

The problem is not technology itself, for any piece of technology can be misused. The questions we must ask are: in whose hands does such technology lie? What biases does a model bring with it? Was the data acquired with consent? How much harm can it cause? Are there laws in our country and across the world to regulate the use of such technology and prohibit its misuse? Why don’t politicians and law enforcement officers care about abusers online? And why are social media owners still not held accountable by courts for all the abuse their applications regularly permit?

That is why we urgently need to take ethical AI more seriously. People with knowledge of advanced technology and artificial intelligence who are also aware of gender issues and social injustice need to work with the government, the boards of social media companies, and the department of law to ensure that AI is not misused.

About the author(s)

Lakshmi Prakash is a data scientist. She sees practicing equality, humanity, and science as the only possible path to a progressive future and holds that the absence of any one of these will negate any growth in other areas. Her hobbies include reading fiction and non-fiction, learning something new, brisk-walking, cycling, and writing a chapter only to forget where she saved it.