The world’s skewed gender bias is no longer startling news. It is rigidly bound into the social fabric through stereotypes and traditionally upheld norms. Such systems create divisive spaces, both socially and digitally. While these divides are glaringly visible from a socio-political vantage point, they remain latent in emerging futuristic technologies, primarily machine learning with big data. With an enormous proportion of the population using online digital services and networks today, we collectively create vast amounts of data every day. This, in turn, is fueling AI algorithms, making them smarter and more precise and improving the quality of their outputs.

Globally, we are relying more heavily each day on algorithms for decisions concerning mortgage loans, insurance risk, job shortlisting, assessments, bond amounts, sentencing recommendations and predictive policing, among many others. Moreover, algorithms now shape most of our day-to-day experiences. It is estimated that AI will contribute about $15.7 trillion to the world economy by 2030, of which about $6.6 trillion will come from increased productivity and the remaining $9.1 trillion from consumption-side effects.

We have steadily moved from assisted or augmented intelligence, which supported humans in their tasks, to increasingly automated and autonomous systems that eliminate the need for human supervision altogether. As exciting as this possibility sounds, our apprehensions should remain intact, for this technology knows nothing about the socio-economic inequalities of our world. And as it begins to affect the lives of individuals, it becomes an ethical necessity to scrutinize what and who is responsible. Historical theorizing about the relationship between gender and technology asserts that the two are co-produced, an idea this article returns to later.

Ethical AI insists on placing the significance and rights of the individual above the broader utility of any digital product. Yet the ethics and governance of the field are largely shaped by individual definitions of fairness or, more realistically, by the definitions held by its creators and powerful managers. Hence, transparency and accountability become vital features. As trust in and preference for futuristic technologies grow, these values need to be more rigorously embedded in, and enforced through, audit frameworks. The decision-making process should always be plain-spoken, open and understandable to everyone.

AI Applications and Gender Prejudices

A nuanced sociological exploration shows how AI applications disseminate gender prejudices to their users. It is hardly a coincidence that the major AI assistants, such as Microsoft’s Cortana, Apple’s Siri, Google Assistant and Amazon’s Alexa, have historically used women’s voices by default. Late 20th-century tech researchers attributed this growing stereotype to studies (later refuted) claiming that women’s voices were more intelligible because of their higher pitch. This belief even helped give rise to entire industries of telemarketers and telephone operators dominated by women.

Today, the trend is justified by studies reporting that audiences respond better to a female voice, describing it as “sympathetic and pleasant” and better suited to the ‘image of a dutiful assistant’. Similarly, when Google tried to launch its new assistant with both male and female voices in 2016, it could not, because it lacked male-voiced training data. The company explained that all its earlier text-to-speech systems had been trained only on female voices and therefore performed better with them.

Professor Safiya Noble, an expert on the topic, has repeatedly argued that growing socialisation with female virtual assistants is rapidly reducing women to “a gendered female who responds on-demand”. Moreover, when we ask the symbolically young, female assistant encoded in the system to perform limited functions, such as booking flight tickets, setting reminders, creating monthly calendars and giving weather reports, it learns these as its primary tasks. Going forward, it therefore wins widespread approval only for such tasks. Ironically, IBM Watson, one of the world’s fastest supercomputers, is used to make complex medical decisions and to compete on quiz shows rather than to set alarms, and it does so in the voice of the acclaimed ‘male’ voice artist Jeff Woodman.

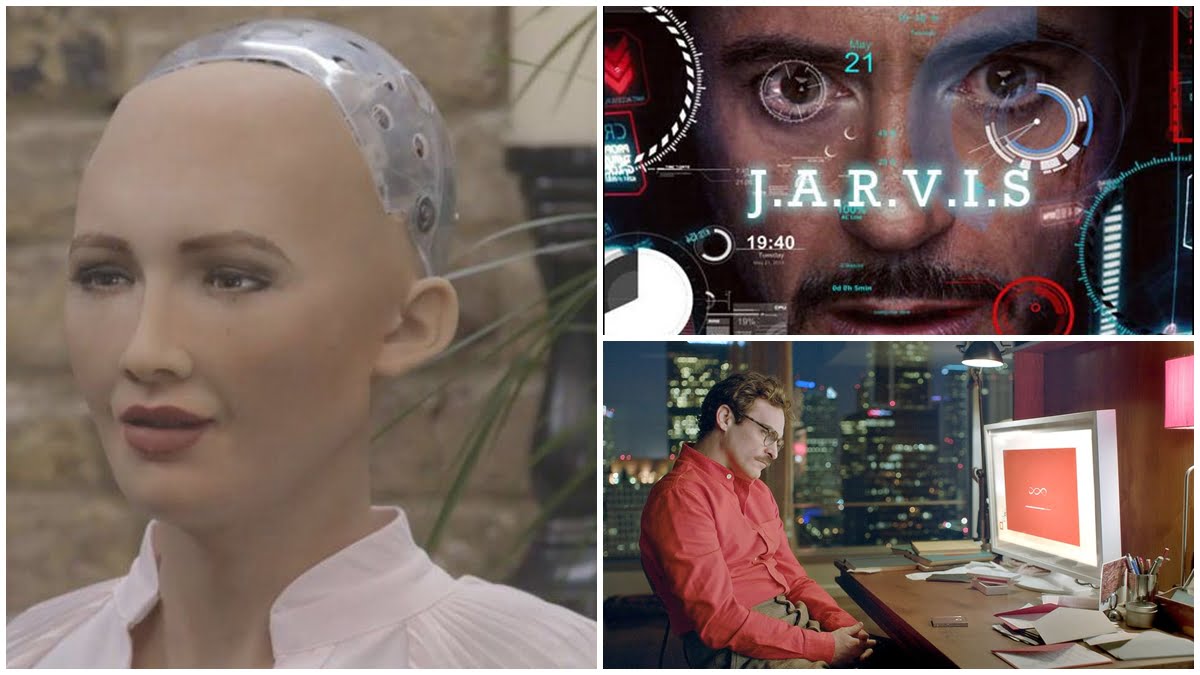

Media representations reinforce the same stereotypes. JARVIS, Tony Stark’s popular AI assistant in Marvel’s Iron Man and Avengers films (2008-2013), is companion-like rather than a servile automated system. Voiced by actor Paul Bettany, JARVIS helps Stark save the world. By contrast, Samantha, the female AI assistant voiced by Scarlett Johansson in the film Her (2013), talks about relationships and plans dates for the protagonist, played by Joaquin Phoenix. It is a classic case of the provider/protector versus caregiver stereotype!

Following the same heteronormative pattern, the humanoid female robot Sophia declared a desire to have a baby and a family roughly a month after she began interacting with the real world. As a citizen of Saudi Arabia, she was even accorded more freedoms and rights than human women there. It is strange how a female bot can enjoy more independence than the women and foreign workers of a country, yet be conditioned so quickly to conform to generations-old notions of the heterosexual family unit.

Both these biases stem primarily from the same cause: the gender bias of the human mind, historically reproduced, recorded and embedded in us. While this article broadly examines two ways in which this prejudice is perpetuated, the question can be approached from several vantage points. More importantly, these two representations of the skew feed a vicious cycle in which each produces the other. Real-world bias seeps into data bias (visible as algorithmic bias), which is then acted upon in critical decisions in business, medicine, law and order, and so on, thereby generating distorted trends that reproduce the very biases we started with. Thus, it comes as little surprise that 92.9% of secretaries and administrative assistants in the USA were women in 2020, with 83.8% of them white.

A 2018 PwC study on perceptions of AI and its tools, whose respondents were working professionals in India’s metro cities, found that almost 61% of them reported favourable use of these digital assistants, noting that the assistants help them with “event reminders” and with “managing their calendar”. Interestingly, 74% of respondents wanted their digital assistants to be “friendly”.

Be it voice assistants, chatbots or virtual agents, by gendering an inanimate product, technology as a discipline is recycling expected social behaviours behind a modern face of neutrality. Alan Winfield, a robot ethicist, asserts that designing a robot with a gender is a deceitful act. He emphasizes that machines themselves cannot belong to a particular gender. Hence, when a gendered bot is designed to coyly dismiss or submit to harassment, it sets up real women for further objectification. Although technology could challenge overused expectations of gender groups, it still seems to treat women and bots alike as ‘almost-human beings with no mind of their own’.

A striking report by UNESCO and EQUALS brought to light a dismal range of voice assistant (VA) responses to verbal sexual harassment. As the table below shows, feminized assistants even thank users for sexually inappropriate comments, trivializing the catcalling and verbal abuse women face every day. When these feminine VAs are presented to consumers as subservient objects at their disposal, technologists abet the wider perception of women as ‘objects’. In turn, this further trivializes women’s agency and their place in decision-making.

Table: Voice Assistants’ (VAs’) Responses to Verbal Sexual Harassment

At the base of AI systems are algorithms written and trained mostly by English-speaking, white, privileged men. While biases of any kind are almost invisible in modern-day tech infrastructure, women occupy an ambiguous position: they are neither entirely a minority group nor a privileged one. This makes gender bias one of the biggest globally acknowledged threats.

Part 2: Gender Bias In Futuristic Technologies: A Probe Into AI & Inclusive Solutions

Mona is a researcher and feminist working at the intersections of gender, social policy and public health and writes contemporary stories using data. She holds a post-graduate degree in Women’s Studies from Tata Institute of Social Sciences and currently works with the Sustainable Development Programme at Observer Research Foundation. You can find her on LinkedIn and Twitter.
