The emergence of artificial intelligence was supposed to herald an unprecedented, halcyon era. We predicted that processes in high-stakes fields like healthcare, transportation, and manufacturing would be strengthened, and that the risk of incorrect diagnoses and machine failures would diminish under the all-knowing, analytical oversight of AI. Instead, it has been put to heinous uses, from the dissemination of misinformation to large-scale surveillance to what seems to be its new low: undressing women on Twitter.

The Grok AI problem: a platform without guardrails

For the past two months, predominantly male Twitter/X users have been using Grok AI (X’s AI chatbot) to produce sexually suggestive images of women on the platform without their consent. Other AI platforms are open to erotic content, with OpenAI planning to introduce ‘Adult Mode’ on ChatGPT, but they usually restrict inappropriate modification of images of real people. Deepfake porn itself is by no means a recent trend: according to a 2019 study, an astounding 96% of deepfakes were pornographic, and 99% of the people targeted in this image-based sexual abuse were women.

The abuse facilitated by Grok isn’t a bug; it’s a feature. Grok operates with Elon Musk’s Silicon Valley mindset of ‘move fast and break things’ and is designed to promote free speech, an agenda for which Musk himself has faced severe backlash because of the nature of his political opinions. The absence of safeguards on X was a deliberate manoeuvre by Musk, one that has turned the platform into a Wild West of image generation whose victims are women with pictures readily available online.

The technical ease with which Grok can produce these images is alarming. Within a matter of seconds, a woman’s online reputation can be destroyed by a string of pictures in her likeness, depicting her in compromising, sexual scenarios she never approved of. Because image generation is fast and integrated into the platform, all it takes is a quick sexually explicit request. Algorithmic amplification, designed to maximise the spread of shocking and sensational content, then ensures that the imagery disperses rapidly across the platform. Even when victims report such content, the moderation response has been inconsistent at best, with many saying that their complaints go unaddressed while the violating images remain widely accessible.

The weaponisation of misogyny: high-profile intimidation cases in India

This trend is especially concerning in India, where women journalists have long faced intimidation. Gauri Lankesh, a journalist and activist, was murdered outside her residence in 2017 for her leftist and anti-Hindutva views after receiving scores of online and offline threats, a fate akin to that of women journalists reporting from conflict and election zones in India. No doubt fuelled by the atmosphere of hatred and animosity established under the BJP, the online vitriol spewed at women journalists has risen with the ascendance of the powers that be.

One target of a particularly malicious form of this abuse has been Rana Ayyub, an investigative and political journalist who published a book about the complicity of Narendra Modi and Amit Shah, respectively the prime minister and the president of the BJP, in a series of riots in 2002 in the western state of Gujarat. She has also investigated a string of murders, committed between 2002 and 2006, in which Amit Shah was involved, and she writes regularly about caste hierarchies and the marginalisation of, and violence against, minorities. Rana Ayyub has been the subject of “an apparently coordinated social media campaign that slut-shames, deploys manipulated images with sexually explicit language, and threatens rape.” Falsely attributed quotes about child rapists and hatred of India have made the rounds of Indian media through several pro-Hindutva publications that care less about factual accuracy than about disseminating misogyny. Their nationalist audiences did not hesitate to unleash a barrage of gang-rape threats, urging violence and cruelty towards the so-called ‘anti-nationalist’. Then came a new low: a two-minute, 20-second pornographic video of a sex act, with her face morphed onto another woman’s body.

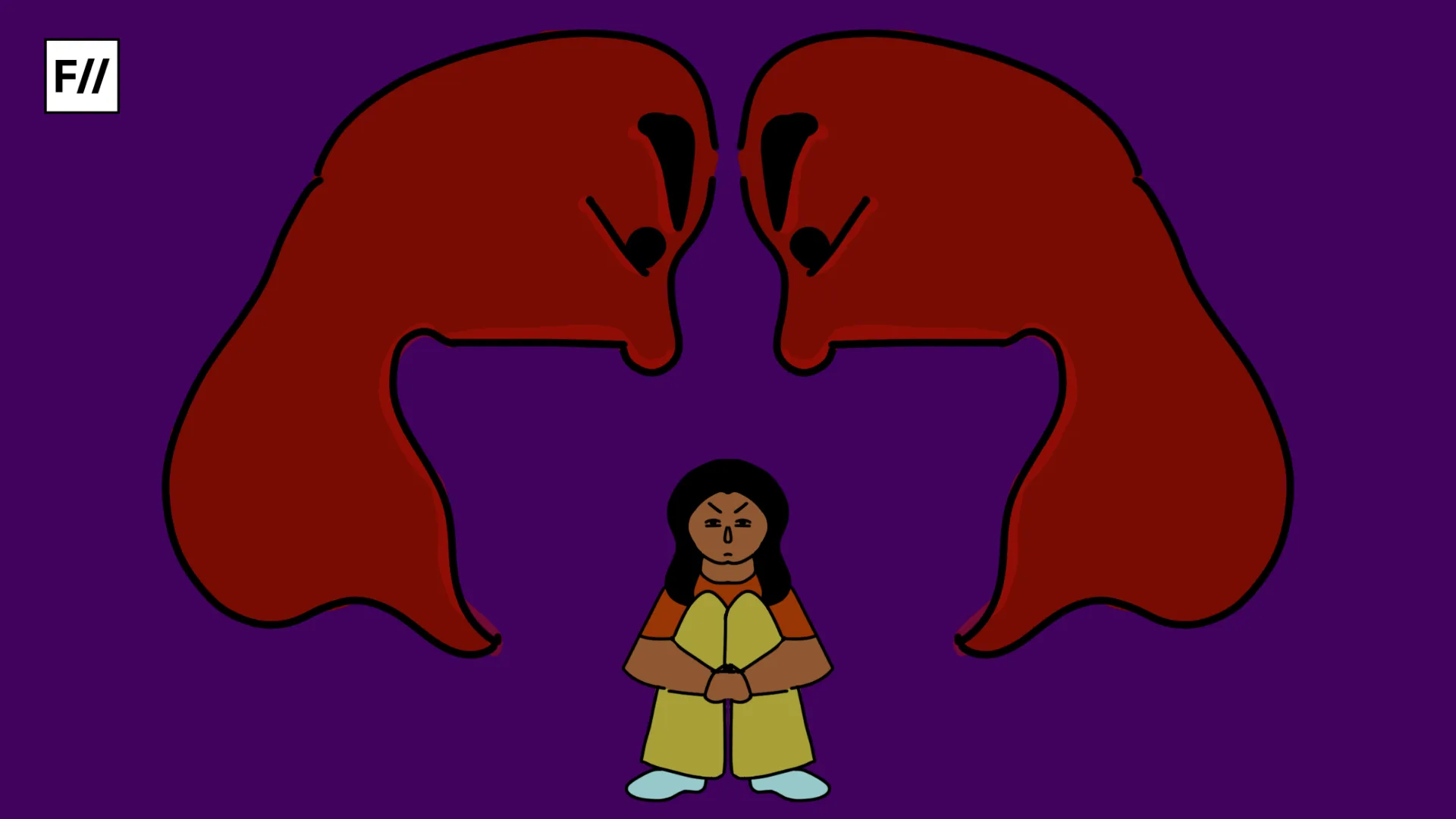

Misogyny has historically manifested in vindictive and violent forms. Attacks on a woman’s political stance often mutate into a more sinister backlash against her sexuality, or more specifically her supposed sexual impropriety, reminding her of her powerlessness as a woman and the fragility of her reputation. It is not enough for her to be branded a Congress sell-out; she must also be ‘branded with the scarlet letter and paraded as a whore’. “The pornographic video ensures the greatest threat of all, the woman is made into a literal canvas for the perverse nature of the trolls, a grotesque erotica, to play out on; a video affirmation of the real position of the female in the Women, the State, and the Journalist hierarchy. Of course, the present of the sexual was a definite affirmation to the difference that gender inspires, but how far does it really go?”

The human cost: psychological and professional devastation

Deepfake images, of the kind Grok AI generated by the thousands every hour, have severe social, psychological, and professional implications for women. The purpose of generating such pictures is the same as that of every online misogynistic endeavour in a patriarchal society: the hyper-sexualisation and public humiliation of targeted women. One of the most devastating facets of this online abuse is its inescapability. Once such material is circulating across the internet, a victim can never be sure that the images are not stored in personal or public archives in perpetuity. The blow of this violation of privacy and dignity is compounded by its online permanence.

Additionally, studies have found an increased likelihood of ‘depression, anxiety, non-suicidal self-harm, and suicidal ideation’ in women who have been targets of this form of sexual abuse. The consequences for professional life are equally destructive. A 2022 study in the International Review of Victimology found that image-based sexual abuse had a direct impact on women’s employment and education. Women in customer service jobs described difficulty facing customers and concentrating at work. Victims had to live with the fear of how others might perceive them and of whether a stranger had seen their photos.

The choice before us is clear: either we impose real constraints on how AI can be used to harm others, or we accept a future where technological innovation serves as a breeding ground for misogyny, harassment, and abuse. There is no neutral ground, no technological determinism that absolves us of moral responsibility. Until platforms like X prioritise human dignity over unrestricted innovation and so-called freedom that comes at the expense of others, women will continue to pay the price for technology without conscience.